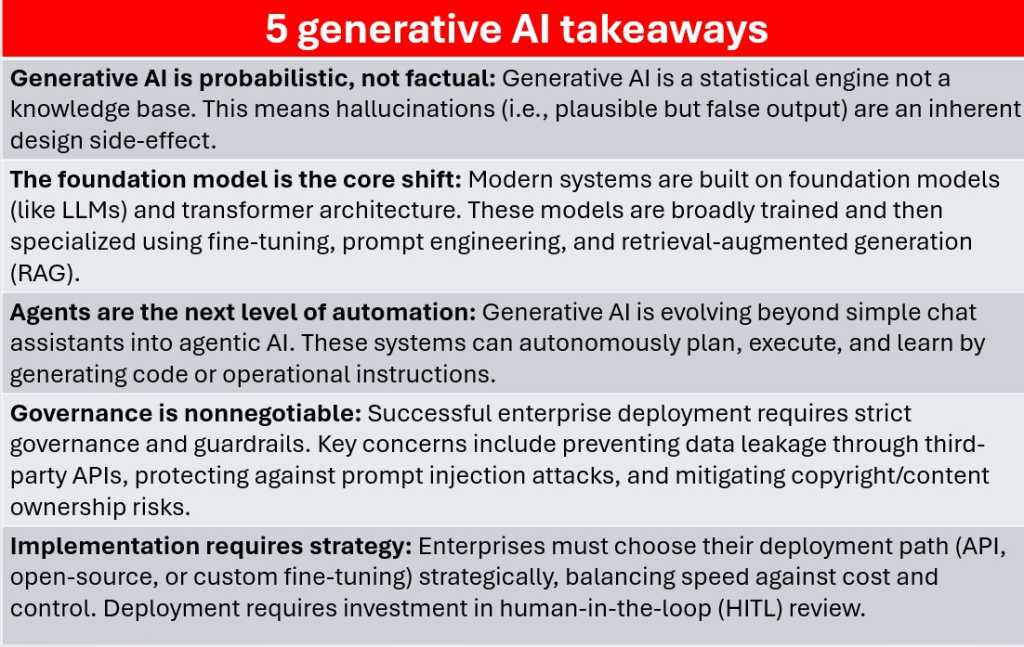

Every generative AI system, no matter how advanced, is built around prediction. Remember, a model doesn’t truly know facts—it looks at a series of tokens, then calculates, based on analysis of its underlying training data, what token is most likely to come next. This is what makes the output fluent and human-like, but if its prediction is wrong, that will be perceived as a hallucination.

Because the model doesn’t distinguish between something that’s known to be true and something likely to follow on from the input text it’s been given, hallucinations are a direct side effect of the statistical process that powers generative AI. And don’t forget that we’re often pushing AI models to come up with answers to questions that we, who also have access to that data, can’t answer ourselves.

In text models, hallucinations might mean inventing quotes, fabricating references, or misrepresenting a technical process. In code or data analysis, they can produce results that are syntactically correct but logically wrong. Even RAG pipelines, which provide real data context to models, only reduce hallucinations; they don't eliminate them. Enterprises using generative AI need review layers, validation pipelines, and human oversight to prevent these failures from spreading into production systems.
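
As one narrow illustration of a validation step, the sketch below flags quoted passages in a model's answer that do not appear in any of the source documents supplied to it. The function name `find_unsupported_quotes` and the sample data are hypothetical, and the check is deliberately simple: it only catches fabricated verbatim quotes, not paraphrased errors or faulty reasoning, so it would complement human review rather than replace it.

```python
import re

def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so minor formatting differences don't matter."""
    return " ".join(text.lower().split())

def find_unsupported_quotes(answer: str, sources: list[str]) -> list[str]:
    """Return double-quoted passages in `answer` that appear in none of `sources`."""
    quotes = re.findall(r'"([^"]+)"', answer)
    normalized_sources = [_normalize(s) for s in sources]
    return [
        q for q in quotes
        if not any(_normalize(q) in s for s in normalized_sources)
    ]

# Hypothetical retrieved context and model answer.
sources = ["The service retries failed requests up to three times."]
answer = (
    'The docs say it "retries failed requests up to three times" '
    'and that it "never drops a message".'
)

# Any flagged quote would be routed to a human reviewer before publication.
flagged = find_unsupported_quotes(answer, sources)
print(flagged)  # prints ['never drops a message']
```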