Frank Crane wasn’t talking about open source when he famously said, “You may be deceived if you trust too much, but you will live in torment if you don’t trust enough.”

But that’s a great way to summarize today’s gap between how open source is actually being consumed and the zero trust patterns that enterprises are trying to codify into their DevSecOps practices.

Every study I see suggests that between 90% and 98% of the world’s software is open source. We’re all taking code written by other people—standing on the shoulders of giants—and building and modifying all that code, implicitly trusting every author, maintainer, and contributor that’s come before us.

Before we even start writing our code, we’re trusting that the underlying open source code was written securely. Then when we use it, we’re trusting that the authors were not malicious, and that the code wasn’t tampered with before we installed it. That’s the opposite of zero trust. That’s maximum trust.

Let’s take a look at the evolution of software distribution, and where new roots of trust need to be planted to support the next decades of open source innovation.

Open source software is secure

In the early days, open source’s detractors stirred up a lot of fear, uncertainty, and doubt around its security. Their argument was that proprietary software’s source code was walled off from prying eyes and therefore more secure than open source, whose source code was readily available to anyone.

But open source has proven that source code transparency has a positive effect. The network effect of many eyes on source code reveals vulnerabilities faster and creates much faster cycles of remediation. The results speak for themselves: 90% of the known exploited vulnerabilities (in the CVE list maintained by CISA) are in proprietary software, despite the fact that around 97% of all software is open source.

Whenever there is a major vulnerability, it’s too easy to malign the overall state of open source security. In fact, many of the highest-profile vulnerabilities show the power of open source security. Log4Shell, for example, was the worst-case scenario for an OSS vulnerability in terms of scale and visibility: Log4j is one of the most widely used libraries in one of the most widely used programming languages. (Log4j was even running on the Mars rover. Technically this was the first interplanetary OSS vulnerability!) The Log4Shell vulnerability was trivial to exploit, incredibly widespread, and seriously consequential. Yet the maintainers were able to patch it and roll out the fix in a matter of days. It was a major win for open source security response at the maintainer level, not a failure.

And that achievement should be widely recognized. Compare that disclosure and fix time of a couple of days to the disclosure programs of firmware vendors or cloud providers that take 30, 60, even 90 days to roll out fixes for something like this in the best case. Enterprises, however, have been slow to apply the fix. According to a recent report from Veracode, more than one in three applications running Log4j (38%) are still using vulnerable versions of the library.

But open source requires trust

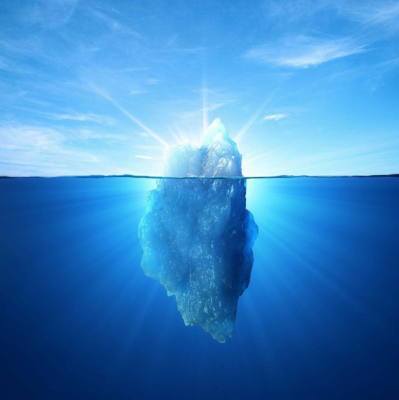

When you first start building your software, that’s just the tip of the iceberg above the surface. You’re building on millions of lines of free software built for the public good, for free. That’s possible because of trust.

Linux distributions, in addition to handling the compilation of source code and sparing OSS users from having to compile and debug, should be credited for the huge role they played in establishing that trust. When you use binaries from a Linux distribution, you’re trusting both the upstream maintainers who write the source code and the distribution that packages it. That’s two different sets of people. The Linux distros understood this and really advanced the state of the art in software security over the last few decades, by pioneering approaches to software supply chains, and by establishing strict methods for vetting package maintainers.

Debian is one of the most notable in the field for its sophistication in codifying trust within the distro. Debian uses a PGP key-signing system, in which a maintainer’s key is added to the Debian keyring only after enough existing maintainers have signed it. Maintainer signatures are checked as new packages are uploaded, and then the Debian distribution itself re-signs all the packages that have been onboarded. So once packages are published by Debian, users can check those signatures no matter where they find the packages, and be sure that the packages came through a set of Debian maintainers they trust and haven’t been tampered with along the way.
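The verification step at the consumer end can be sketched in a few lines of Python. This is a simplified illustration, not Debian's actual tooling: it assumes the signature on a manifest of package checksums has already been verified by other means (for example with GnuPG), and the function names and the package file name are hypothetical.

```python
import hashlib
from typing import Dict


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_package(name: str, data: bytes, trusted_manifest: Dict[str, str]) -> bool:
    """Accept a package only if it is listed in the trusted manifest
    and its contents hash to the recorded digest.

    Assumption: `trusted_manifest` came from an index whose signature
    was already checked against a keyring the user trusts.
    """
    expected = trusted_manifest.get(name)
    if expected is None:
        # Unknown package: nothing vouches for it, so reject.
        return False
    return expected == sha256_hex(data)


# Hypothetical package contents and manifest, for illustration only.
package_bytes = b"example package contents"
manifest = {"hello_1.0.deb": sha256_hex(package_bytes)}

print(verify_package("hello_1.0.deb", package_bytes, manifest))
print(verify_package("hello_1.0.deb", package_bytes + b"tampered", manifest))
```

Because the check depends only on the signed manifest and the bytes in hand, it gives the same answer whether the package was downloaded from an official mirror or found somewhere else.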

It’s a model that’s worked phenomenally well.

And OSS dependencies have outgrown trust models

But today, most software consumption is occurring outside of distributions. The programming language package managers themselves, such as npm (JavaScript), pip (Python), RubyGems (Ruby), and Composer (PHP), look and feel like Linux distribution package managers, but they work a little differently. They basically offer zero curation: anyone can upload a package and mimic a language maintainer. And how do you know what you are trusting, when a single package installation often installs packages from dozens of other random people on the internet?
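To make the transitive trust problem concrete, here is a minimal Python sketch that walks a dependency graph and lists everyone you end up trusting after a single install. The graph, package names, and publisher names are all invented for illustration; a real package manager resolves this from registry metadata.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical dependency graph: package -> its direct dependencies.
DEPENDENCIES: Dict[str, List[str]] = {
    "my-app": ["web-framework", "http-client"],
    "web-framework": ["template-engine", "http-client"],
    "http-client": ["url-parser"],
    "template-engine": [],
    "url-parser": [],
}

# Hypothetical mapping of package -> account that published it.
PUBLISHERS: Dict[str, str] = {
    "web-framework": "framework-team",
    "http-client": "alice",
    "template-engine": "bob",
    "url-parser": "carol",
}


def transitive_dependencies(root: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Return every package reachable from `root`, excluding `root` itself."""
    seen: Set[str] = set()
    queue = deque(graph.get(root, []))
    while queue:
        pkg = queue.popleft()
        if pkg in seen:
            continue  # already visited via another path
        seen.add(pkg)
        queue.extend(graph.get(pkg, []))
    return seen


def trusted_publishers(root: str, graph: Dict[str, List[str]],
                       publishers: Dict[str, str]) -> Set[str]:
    """Return the set of publishers implicitly trusted by installing `root`."""
    return {publishers.get(pkg, "unknown")
            for pkg in transitive_dependencies(root, graph)}


print(sorted(transitive_dependencies("my-app", DEPENDENCIES)))
print(sorted(trusted_publishers("my-app", DEPENDENCIES, PUBLISHERS)))
```

In this toy graph, `my-app` declares two direct dependencies but ends up trusting four packages and four publishers. Real dependency trees are typically far deeper.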

Docker further multiplied this transitive trust issue. Docker images are easy to build because they use the existing package managers inside of them. You can use an npm install to get npm packages, then wrap that up into a Docker image. You can run an install with the package manager of any language, then ship the result as one big tarball. Docker recognized this trust gap and to their credit tried to bridge it with something called Verified Builds, which evolved into a feature inside Docker Hub.

These Docker Verified Builds were a way for users to specify the build script for a Docker image in the form of a Dockerfile, in the source code repository. The maintainer writes the Dockerfile, but then Docker does the build, so what you see in the image is the same code from its original maintainers. Docker rolled this out years ago and is continuing to improve it, so they deserve a big shout-out.

Docker is not the only player in this trust web for cloud-native software, though; it gets more complicated. There’s a layer on top of Docker that’s commonly used in the Kubernetes realm. Helm lets you package up a bunch of Docker images and configuration. It’s a package of packages.

So if you install the Helm chart for Prometheus, for example, you are likely also to get a bunch of other images from random personal projects. You might be sure you are getting Prometheus from the Prometheus maintainers, because Artifact Hub shows it came from a verified publisher, but the Prometheus chart often has dependencies that do not come from verified publishers.

The official Helm Charts Repository maintained by Helm’s original creators was a curated attempt at codifying trust in these images. It had the potential to bring to cloud native apps the same type of security curation provided by Linux distros. But unfortunately it proved too hard to scale, and it gave way to a more federated model like that of the programming language package managers, where each project maintains its own Helm charts.

All of these layers of transitive dependencies make up a major portion of the modern software supply chain security problem and one of the juiciest areas for malicious actors to exploit. This is the front line of the new battle to preserve all the great trust in open source that has been built up through the decades.

Making software secure from the start

Software distribution is dramatically different than it was 20 years ago, when you used to buy shrink-wrapped software in a store like CompUSA or Best Buy. When you purchased a box of software, you knew exactly what you were getting. You knew that it came from the person it was supposed to, and that it hadn’t been tampered with.

As software distribution shifted from CD-ROMs to the internet, Linux distributions proved astonishingly successful at providing trust.

When Log4j and SolarWinds showed some of the cracks that new software supply chain attacks are exploiting, teams started locking down build systems, using frameworks like SSDF and SLSA, and checking software signatures produced by Sigstore (now the default software signing method used by Kubernetes and all of the major programming language registries). That’s progress.

This open source security domain is complex. We’re talking about decades-old trust models up against 372 million repositories on GitHub alone!

There’s still a major disconnect between known CVEs and developers unwittingly re-installing them through transitive dependencies. There’s still a whole class of vulnerabilities that live entirely outside of Linux distributions and therefore do not get picked up by security scanners. It’s hard enough for software consumers to realize when they are running malicious software packages in the first place, let alone be nimble enough to rapidly patch them when updates become available.
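One way to picture that disconnect is a check of the fully resolved dependency set, transitive packages included, against a list of known advisories. The Python sketch below is illustrative only: the advisory data, package names, and version numbers are hypothetical, and the version comparison assumes simple dotted numeric versions rather than any real ecosystem's versioning rules.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '2.14.1' into (2, 14, 1)."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical advisories: package -> first version that contains the fix.
ADVISORIES: Dict[str, str] = {
    "logging-lib": "2.17.1",
}


def vulnerable_packages(resolved: Dict[str, str],
                        advisories: Dict[str, str]) -> List[str]:
    """Flag every resolved package whose version predates the fixed version."""
    flagged: List[str] = []
    for name, version in sorted(resolved.items()):
        fixed = advisories.get(name)
        if fixed is not None and parse_version(version) < parse_version(fixed):
            flagged.append(f"{name}=={version} (fixed in {fixed})")
    return flagged


# Hypothetical resolved set: 'logging-lib' was never requested directly,
# it arrived as a dependency of something else.
resolved_set = {
    "my-app": "1.0.0",
    "web-framework": "4.2.0",
    "logging-lib": "2.14.1",
}

for finding in vulnerable_packages(resolved_set, ADVISORIES):
    print(finding)
```

The check only helps if the advisory data covers the ecosystem the package came from, which is exactly the gap for packages that live outside Linux distributions.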

In 2024, we’re going to see the software supply chain security gaps close between CVEs, Linux distros, and software packages. We’re going to see a major reduction in the nonessential software artifacts that ship inside of both distros and images, and distros themselves starting to compete on how rapidly they can ship vulnerability fixes, as Wolfi does.

We’re going to start seeing security teams align their expectations of application infrastructure security with concepts of zero trust, and no longer accept a bunch of cruft in their distros and images that may introduce cracks and back doors through transitive dependencies. We’re going to see security teams that want to get closer to the kernel with technologies like eBPF and Cilium, and to use the runtime security policy enforcement that projects like Tetragon can provide. And we’ll see this lockdown accelerated by AI use cases that are asking enterprises to trust even more frameworks with even more transitive dependencies, including specialized GPU architectures and ever more nuanced back doors.

For developers to continue to enjoy the freedom to build on their favorite OSS components, software distribution needs a rethink. We need more uniform ways to build, package, sign, and verify all of the source code that goes into packages in containers, and the distribution of these cloud-native components, while keeping them minimal and secure by default. They’re all sitting at the top of the stack, which is the perfect place to refactor roots of trust that are going to support the next decades of open source innovation in the cloud-native world. It’s time for a de facto standard safe source for open source software.

Dan Lorenc is CEO and co-founder of Chainguard. Previously he was staff software engineer and lead for Google’s Open Source Security Team (GOSST). He founded projects like Minikube, Skaffold, TektonCD, and Sigstore.

—

New Tech Forum provides a venue for technology leaders—including vendors and other outside contributors—to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to doug_dineley@foundryco.com.